Having done extensive research (a quick google search that threw up this excellent and well-referenced post but nothing much else; and reading comments at RC and elsewhere) it's pretty clear to me that (a) almost no-one outside the immediate community knows how coupled ocean-atmosphere GCMs work and are used in climate modelling and prediction (or "projection" as the IPCC calls it); and (b) this may be because there are no webpages on it. If you fancy reading some GCM source code, then this will get you GISS ModelE; or this for HadCM3. But you're unlikely to learn much from it unless you're *very* determined.

So I'm going to write up a post on it. What I hope to do is produce a first draft here, publish it, get feedback from you lot on bits that are unclear (or mistaken? no...; still the ocean bit is thin) or missing, and update it until adequate. Or until I get bored. Also please comment if you can find a better description elsewhere.

This from the Met Office is an example of something that's not much use...

For definiteness, I'm going to talk about coupled-atmos-ocean GCMs (AOGCMs, though I'll probably just say GCMs) which are the heavyweight tool for climate prediction. You can't do that with an atmos-only model. And the only ones I'm at all familiar with are HadCM3/HadGEM.

Components

AOGCMs have two main components (atmosphere and ocean of course) and two more minor components (sea ice and land surface). I suppose sea ice modellers (me!) or land surface folk might complain about me calling them minor. Delete the word if it offends you. Traditionally the land surface scheme sits inside the atmos model, and might well be considered part of it. The sea ice scheme might well sit inside the ocean model. Mostly.

Those are (I think) the essential bits. You can also have various optional bits (for example carbon cycle or atmospheric chemistry) but those are not needed. One very common mistake is to think that GCMs predict CO2 levels. Most don't. Most are run with observed CO2 (if post-dicting the past) or prescribed CO2 (either from an economic model or an idealised 1% increase, say) if predicting the future. Even a carbon cycle model would be run with prescribed anthro CO2 inputs. Most GCMs don't contain a glacier or icesheet model either, because the scales are incompatible: glaciers are too small, and ice sheets have millennial timescales (HadCM3 has been run with a Greenland ice sheet, but only once, it took ages, and I think it was specially speeded up).

I'll add a forcings section at the bottom.

Discretisation and resolution

I'll only say a bit about this.

This seems quite helpful.

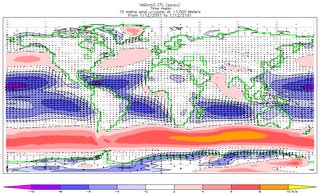

For the atmosphere and the ocean the basic fluid dynamics equations need to be converted from their continuous (partial differential equation) form and discretised so that they can be handled by numerical approximation. For the atmosphere, this can take the form of a spectral or finite difference decomposition. I'm not going to talk about the spectral stuff, cos it will only confuse, and the end results are not much different. For the oceans you can't use spectral stuff anyway. What happens then is that instead of a continuous equation d(f)/dt=g(x,t) you end up with something like f_{x,t+dt}=f_{x,t}+G({x,t},{x-dx,t},{x+dx,t})... I'm handwaving for effect here (apart from anything else in a GCM the x's are 3D (lat, long and height)). The point is to end up with an expression for the values at time t+dt, in terms of things at time t (or use an implicit solution...). But anyway, this gives you two important parameters to choose: the timestep, dt; and the spatial discretisation dx.
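To make the handwaving a little more concrete, here is a minimal sketch of an explicit update for 1D advection, df/dt = -u df/dx, on a periodic grid. The choice of a first-order upwind scheme, the name `step_upwind` and all the numbers are assumptions for illustration; no real dynamical core (HadCM3's included) is this simple.

```python
def step_upwind(f, u, dx, dt):
    """Advance f by one timestep for df/dt = -u * df/dx (u > 0), periodic in x.

    The new value at each point depends only on old values at that point
    and its upwind neighbour: f[x, t+dt] = f[x, t] + G(f[x, t], f[x-dx, t]).
    """
    c = u * dt / dx  # Courant number
    # f[i - 1] with i == 0 wraps to the last point, giving periodicity.
    return [f[i] - c * (f[i] - f[i - 1]) for i in range(len(f))]


# A single blob on a 4-point grid: 300 km spacing, half-hour step, 10 m/s wind.
f0 = [0.0, 1.0, 0.0, 0.0]
f1 = step_upwind(f0, u=10.0, dx=300e3, dt=1800.0)
```

With these numbers a small part of the blob moves one point downwind and the total is conserved. A real model does the same sort of thing in 3D, for several variables at once, with rather better numerics.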

Typical values for the atmosphere are 1/2 hour (or less) for the timestep; and 300 km for the horizontal; and about 20-40 levels in the vertical (not evenly spaced). At least for HadCM3 the ocean timestep is longer (1h) and the spatial step smaller (1.25 degrees, about 100 km).

Space and time steps are related by the CFL criterion: as the space steps get smaller so must the time steps, to avoid instability. Note that there is a resource/accuracy trade-off in the timestepping: longer timesteps allow the model to run faster; shorter timesteps allow more accurate integration. In practice, I think, people take the largest timestep compatible with stability, since errors elsewhere mean the loss of accuracy from taking as large a timestep as possible doesn't matter.
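For a simple explicit advection scheme the CFL criterion is roughly speed * dt / dx <= 1. A small sketch of what that implies for the timestep follows; the function name `max_stable_dt` and the 100 m/s limiting speed are assumptions picked for illustration, and in a real model the limit depends on the scheme, on the fastest waves it has to carry, and on how the grid converges towards the poles.

```python
def max_stable_dt(dx_m, speed_ms, courant_max=1.0):
    """Largest timestep (seconds) satisfying speed * dt / dx <= courant_max."""
    return courant_max * dx_m / speed_ms


dt_300km = max_stable_dt(300e3, 100.0)  # 3000 s, i.e. 50 minutes
dt_150km = max_stable_dt(150e3, 100.0)  # 1500 s, i.e. 25 minutes
```

Halving the grid spacing halves the permitted timestep. In two horizontal dimensions it also quadruples the number of gridpoints, so under these assumptions doubling the horizontal resolution costs about eight times as much.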

This pic gives you some idea of the grid cell size; this has refs and stuff.

Atmosphere

The atmosphere sort-of divides into two components: a dynamical core to handle the discretisation of the fluid dynamics; and a pile of physical parametrisations to handle things (clouds, for example) that don't get a fluid-dyn representation. Also radiation.

So the dynamical core handles the integration (i.e., getting from one time step to the next) of [u,v] (horizontal velocity at the various vertical levels) and p* (surface pressure) and omega (vertical velocity). Once the winds are known, other variables (q, moisture) can be advected around. It is generally reckoned that the GCM type scale (200-300 km gridpoints) is enough to resolve most of the energetic scales in the atmosphere.

At some point the bottom layer of the atmosphere needs to exchange fluxes (momentum and heat and moisture) with the surface, which is where the surface exchange scheme comes in, which counts as part of the atmosphere. Models typically have their lowest level at a few tens of metres, which requires a parametrisation of the boundary layer exchange, point by point.

The radiation code handles the short wave (visible; solar) fluxes and the long-wave (infra-red) fluxes separately (since there is little overlap). The vertical column above each grid point is treated separately from the ones next door (since the cells are 100's of km wide but only 10's of km high, edge effects get neglected). SW comes in at the top, gets reflected, diffused, absorbed and generally bounced around off the atmos, the clouds and the sfc. Similarly the LW bounces around but also has sources. The radiation code, effectively, is the bit where enhanced CO2 (or other GHG forcing) gets inserted, by affecting the transmissivity of the atmosphere. In the real world radiation has a continuous spectrum (with lines in it...); in line-by-line codes thousands of lines and continua are specified; in GCM type codes each of the SW and LW radiation codes will deal with a small (~10) number of bands which amalgamate important lines and continua. Radiation codes are expensive: HadAM3 only calls the SW radiation 8 times a day.
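With a half-hour timestep, 8 calls a day works out as one radiation call every 6 timesteps. A sketch of how an expensive scheme might be called only every few steps, with its last result reused in between, is below. The names (`run_day`, `compute_sw`) are hypothetical, and holding the flux fixed between calls is an assumption; I'm not claiming this is what HadAM3 actually does in between.

```python
STEPS_PER_DAY = 48            # half-hour timestep
RADIATION_CALLS_PER_DAY = 8
RADIATION_INTERVAL = STEPS_PER_DAY // RADIATION_CALLS_PER_DAY  # 6 steps = 3 hours


def run_day(compute_sw):
    """Step through one model day, calling the SW scheme only every few steps.

    Returns the number of times the (expensive) scheme was called.
    """
    calls = 0
    sw_flux = None
    for step in range(STEPS_PER_DAY):
        if step % RADIATION_INTERVAL == 0:
            sw_flux = compute_sw(step)  # expensive: refresh the fluxes
            calls += 1
        # ... the rest of the timestep would use the stored sw_flux here ...
    return calls


calls_made = run_day(lambda step: 0.0)  # dummy scheme returning zero flux
```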

There are separate schemes for the convective clouds and "large scale" clouds. LS clouds are those that are effectively resolved: if a grid box cools enough to get the cloud scheme invoked, then clouds form (once upon a time, this happened if the RH got above 100% (or perhaps 95%, with some ramping); nowadays I think it's more complex). Convective clouds require a parametrisation: again this has evolved: once, if a part of the column was convectively unstable it got overturned; now much more complex schemes exist. There is a lot of scope for different schemes I think. Ppn gets to fall as rain or snow according to temperature; it may re-evaporate on the way down if it falls through a dry layer. Once you have the clouds they need to feed into the radiation scheme. Clouds may be true model prognostics or get diagnosed at each timestep.
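The old-style large scale cloud diagnosis can be sketched in a few lines. The linear ramp between 95% and 100% RH, the 0 degrees C rain/snow threshold and the function names are all assumptions for illustration, not taken from any particular model.

```python
def cloud_fraction(rh, rh_crit=0.95):
    """Diagnose large-scale cloud fraction from relative humidity (as a fraction).

    No cloud below rh_crit, full cover at saturation, linear ramp in between.
    """
    if rh <= rh_crit:
        return 0.0
    if rh >= 1.0:
        return 1.0
    return (rh - rh_crit) / (1.0 - rh_crit)


def precip_phase(temp_c, threshold_c=0.0):
    """Return 'snow' or 'rain' according to temperature in degrees C."""
    return "snow" if temp_c < threshold_c else "rain"
```

So a grid box at 90% RH gets no large scale cloud, one at 97.5% gets about half cover, and anything saturated is fully overcast. Modern schemes are considerably more involved than this.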

Ocean

I know less about the ocean code. The ocean is different in that it has boundaries. It also has (in the real world) more energy at smaller spatial scales and so is rather harder to get down to a resolution which properly resolves it. But still there is a dynamical core which solves for the transport.

Radiation pretty well gets absorbed in the upper layers so is less interesting than in the atmos case. Convection is rather less common, and mostly associated with brine rejection from sea ice (?), which needs parametrisation just like cumulus convection in the atmosphere.

Unlike the atmosphere, which exchanges interesting fluxes with the land surface, the bottom of the ocean isn't very interesting.

Sea ice

Sea ice is effectively an interface between atmos and ocean and insulates one from the other. It gets pushed by wind stress from the atmosphere, ocean-ice drag underneath, Coriolis force and internal stresses (it is usually modelled as an (elastic) viscous plastic substance; the details of this are really quite interesting but complex).

In the Hadley models, it exists on the same grid as the ocean model. By affecting the albedo it also affects the ice-ocean interaction. If it has a different roughness length to the ocean, it will affect the momentum fluxes too.

Sea ice effectively splits into "dynamics" (moving it around) and "thermodynamics" (heat transfer through it, melting/freezing, albedo, etc).

Land surface

The land surface scheme needs to allow us to calculate the fluxes of heat, moisture and momentum with the atmosphere; and the radiative fluxes. Fortunately it doesn't move so doesn't need any dynamics so is often not a separate model.

Fluxes of heat are done by calculating the temperature at various depths in the soil which gets you a surface temperature, together with a surface roughness length (which depends on...). Fluxes of moisture are done by knowing the "soil" moisture based on some more or less sophisticated scheme (see PILPS) which will also affect the way falling precipitation is handled. This includes representations of evapotranspiration etc etc. Momentum just needs a roughness length, the stability or otherwise of the atmos BL, and possibly some representation of the unresolved orography.

Most such schemes have prescribed vegetation; but more exciting ones can have interactive vegetation schemes (the UKMO one is called TRIFFID).

Any of this gets affected by an overlying snow cover; which obviously affects the albedo, but also insulates.

Forcing

This will be short, since I suspect you can find it better elsewhere.

A fully coupled model needs an initialisation state (usually 1860 or thereabouts if it's to be used for simulating 20C and the future, to avoid cold start and stuff), prescribed CO2 (and other minor GHG) concentrations which vary through time; solar forcing (variable or not); volcanic and other aerosols. It may also get a varying land use. And that's about it (did I forget anything?). The point being to let it get on with it.

People occasionally suggest that it would be a good idea to run them in semi-NWP mode and assimilate weather obs along the way, so that they track 20C temps as accurately as possible and predict the future as well as poss. This is plausible (in some ways) but not done.

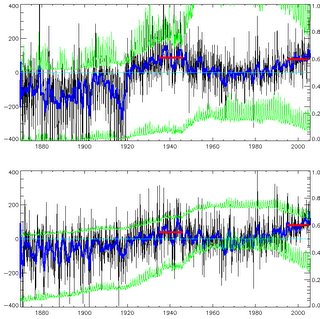

Output

At the end of all this, you end up with values of temperature, velocity, humidity, cloud at 2*10^5 atmospheric gridpoints (or thereabouts) together with more in the ocean and many another variable besides, every half hour, for 200 years (or however long). Assuming you bothered to save them.
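To get a feel for how much that is, here is some back-of-envelope arithmetic for the atmosphere alone. Everything except the gridpoint count, the half-hour step and the 200 years is an assumption: 5 fields (temperature, two velocity components, humidity, cloud), 4 bytes per value, and a 360-day model year.

```python
GRIDPOINTS = 200_000       # about 2*10^5 atmospheric gridpoints
FIELDS = 5                 # assumed: T, u, v, humidity, cloud
STEPS_PER_DAY = 48         # half-hour timestep
DAYS_PER_YEAR = 360        # assumed model calendar
YEARS = 200
BYTES_PER_VALUE = 4        # assumed single precision

values = GRIDPOINTS * FIELDS * STEPS_PER_DAY * DAYS_PER_YEAR * YEARS
terabytes = values * BYTES_PER_VALUE / 1e12
print(f"{values:.3e} values, about {terabytes:.1f} TB")
```

That comes to roughly 3.5*10^12 values, or about 14 TB under these assumptions, which is one good reason for not saving everything.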

Oddly enough that level of detail is often not what you want. So the first thing to look at tends to be area-averaged (often global) and time-averaged (monthly; yearly) values of one variable of particular interest.
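One wrinkle with the area average: on a regular lat-long grid the cells shrink towards the poles, so a plain mean over gridpoints over-weights high latitudes. A minimal sketch of a cos(latitude)-weighted global mean follows; the function name and the tiny 3x2 example grid are made up for illustration.

```python
import math


def global_mean(field, lats_deg):
    """Area-weighted mean of field[lat][lon] on a regular lat-long grid.

    Each latitude row is weighted by cos(latitude), which is proportional
    to the area of the grid cells in that row.
    """
    total = 0.0
    weight_sum = 0.0
    for row, lat in zip(field, lats_deg):
        w = math.cos(math.radians(lat))
        total += w * sum(row) / len(row)
        weight_sum += w
    return total / weight_sum


lats = [-60.0, 0.0, 60.0]
field = [[0.0, 0.0], [10.0, 10.0], [0.0, 0.0]]
mean = global_mean(field, lats)
```

Here the weighted mean is 5, whereas the unweighted mean over the six gridpoints would be about 3.3. Time averaging is then just an ordinary mean over the saved timesteps.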